She Who Must Not Be Named

There’s no “sharing” your name with an AI device…

What happens when ubiquitous tech devices are programmed to activate at the sound of a real human name? It becomes almost impossible for people who have that name (and similar names) to be addressed, or even mentioned, without triggering the virtual assistant to respond and interrupt whatever activity is taking place.

So-called Smart Speakers:

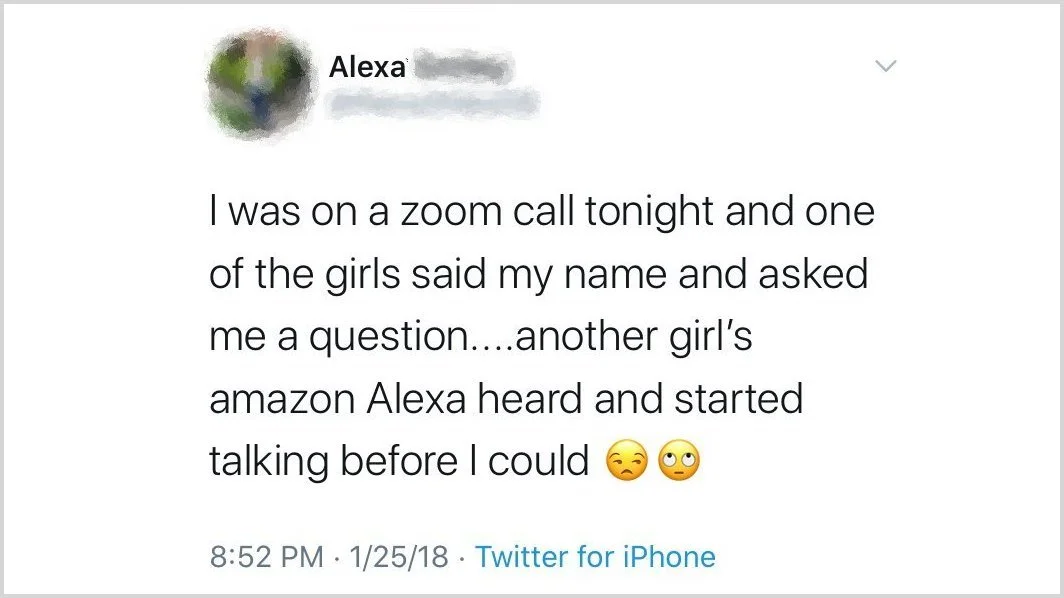

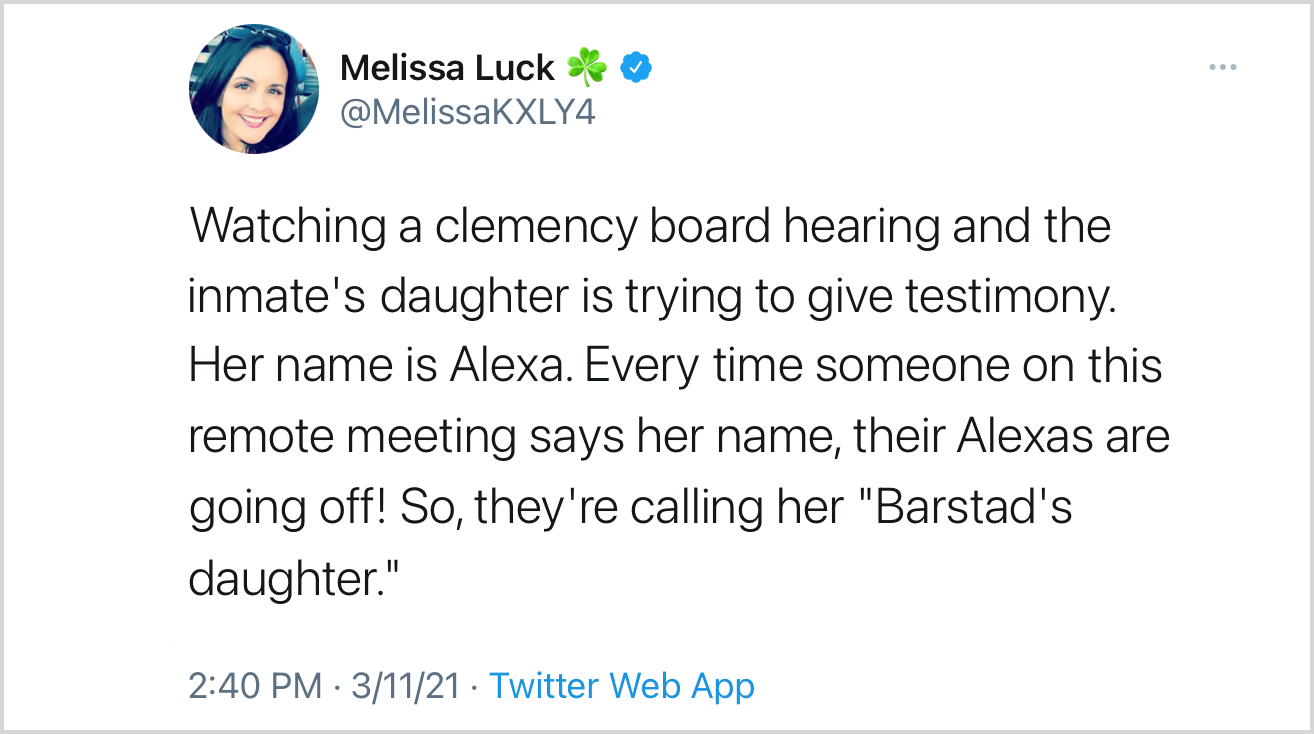

Even though these devices are often called “smart speakers”, they can’t tell the difference between a user giving them a command and someone in the vicinity speaking to (or about) a person named Alexa (or a similar name). When two people share a name in the same space, others have ways to indicate who they’re addressing: adding a last name or initial, or looking in the direction of the person they’re speaking to. The virtual assistant has no such cues and always reacts when it senses its wake word. It’s precisely this mechanism that makes using a human name as a wake word such a disaster.

She who must not be named…

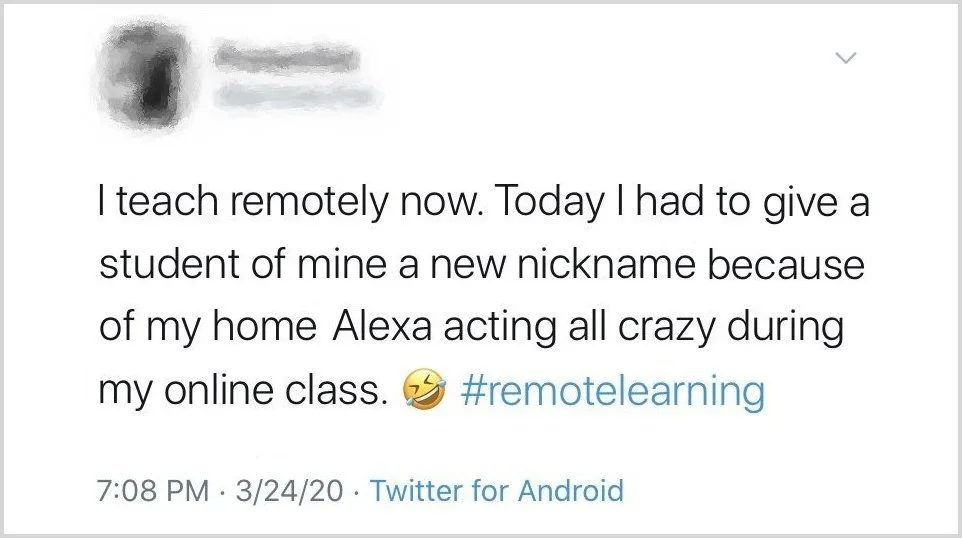

Unfortunately, rather than changing wake words (if that’s even possible for the product being used) or turning off devices, many device users fall back on what seems like the easiest “solution” at the time - trying not to refer to the person by their name. This approach may not even be deliberately thought out; it may simply be the natural result of seeing the chaos that happens otherwise and wanting to avoid it. Ironically, refusing to call a person by their name dehumanizes them, yet it’s precisely their humanity that allows device users to even attempt this approach in the first place. After all, you can’t tell an AI that you will no longer be summoning it by its name when you need it, because it would simply stop working. While a human could attempt to go without their name, it’s unreasonable and unethical to ask them to do so.

“It’s been decided that she will be referred to as A …”

Another unfortunate method of “solving” the problem of false wakes caused by people’s names is for device users to assign the person a new name. Expecting humans to relinquish something as crucial to their identity as their name in order to reserve it for summoning a robot might seem shocking, but that’s exactly what’s been happening to people named Alexa (and similar names) in the years since Amazon launched its AI. What’s worse is that this is happening even in settings such as classrooms and workplaces, where the people in charge have been taught the importance of getting people’s names right and avoiding microaggressions.

Next: Nuisance Name